Python for Scientific Computing

Attending the course 25-27 November, 2025?

See the course page here and watch at https://twitch.tv/coderefinery. Whether you are or aren’t, the course material is below. Videos will appear in this playlist (Last year’s videos: playlist).

Python is a modern, object-oriented programming language, which has become popular in several areas of software development. This course discusses how Python can be utilized in scientific computing. The course starts by introducing some of the main Python tools for computing: Jupyter for interactive analysis, NumPy and SciPy for numerical analysis, Matplotlib for visualization, and so on. In addition, it talks about how python is used: related scientific libraries, reproducibility, and the broader ecosystem of science in Python, because your work is more than the raw code you write.

This course (like any course) can’t teach you Python… it can show your some examples, let you see how experts do things, and prepare you to learn yourself as you need to.

Prerequisites

Knowing basic Python syntax. We assume that you can do some Python programming, but not much more that that. We don’t cover standard Python programming. Here a short course on basic Python syntax, with further references.

Watch or read the command line crash course, if you aren’t familiar.

You should be able to use a text editor to edit files some.

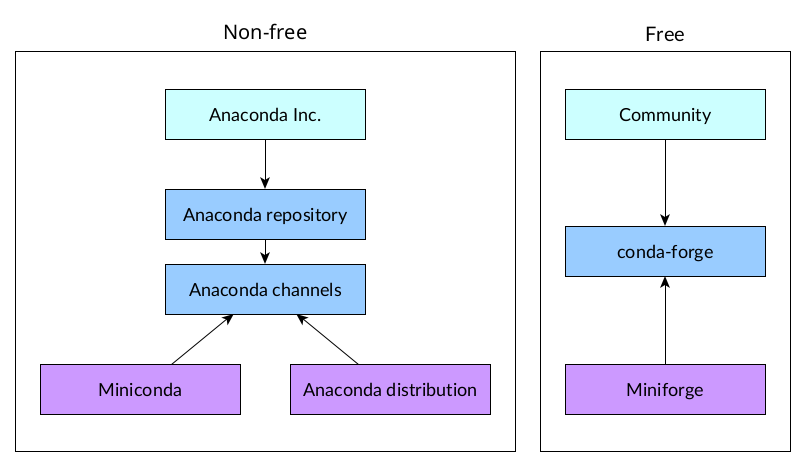

The software installation described below (basically, anaconda).

These are not prerequisites:

Any external libraries, e.g. numpy

Knowing how to make scripts or use Jupyter

Videos and archived Q&A

Videos and material from past instances:

(prereq) |

|

30 min |

|

60 min |

|

60 min |

|

30 min |

|

60 min |

|

60 min |

|

30 min |

|

60 min |

|

40 min |

|

20 min |

|

30 min |

|

15 min |

|

30 min |

|

45 min |

|

45 min |

|

30 min |

|

60 min |

|

30 min |

Introduction to Python

Questions

What are the basic blocks of Python language?

How are functions and classes defined in Python?

Objectives

Get a very short introduction to Python types and syntax

Be able to follow the rest of the examples in the course, even if you don’t understand everything perfectly.

We expect everyone to be able to know the following basic material to follow the course (though it is not everything you need to know about Python).

If you are not familiar with Python, here is a very short introduction. It will not be enough to do everything in this course, but you will be able to follow along a bit more than you would otherwise.

See also

This page contains an overview of the basics of Python. You can also refer to This Python overview from a different lesson which is slightly more engaging.

Scalars

Scalar types, that is, single elements of various types:

i = 42 # integer

i = 2**77 # Integers have arbitrary precision

g = 3.14 # floating point number

c = 2 - 3j # Complex number

b = True # boolean

s = "Hello!" # String (Unicode)

q = b'Hello' # bytes (8-bit values)

Collections

Collections are data structures capable of storing multiple values.

l = [1, 2, 3] # list

l[1] # lists are indexed by int

l[1] = True # list elements can be any type

d = {"Janne": 123, "Richard": 456} # dictionary

d["Janne"]

s = set(("apple", "cherry", "banana", "apple")) # Set of unique values

s

Control structures

Python has the usual control structures, that is conditional statements and loops. For example, the The if statement statement:

x = 2

if x == 3:

print('x is 3')

elif x == 2:

print('x is 2')

else:

print('x is something else')

While loops loop until some condition is met:

x = 0

while x < 42:

print('x is ', x)

x += 0.2

For loops loop over some collection of values:

xs = [1, 2, 3, 4]

for x in xs:

print(x)

Often you want to loop over a sequence of integers, in that case the

range function is useful:

for x in range(9):

print(x)

Another common need is to iterate over a collection, but at the same

time also have an index number. For this there is the enumerate()

function:

xs = [1, 'hello', 'world']

for ii, x in enumerate(xs):

print(ii, x)

Functions and classes

Python functions are defined by the Function definitions keyword. They take a number of arguments, and return a number of return values.

def hello(name):

"""Say hello to the person given by the argument"""

print('Hello', name)

return 'Hello ' + name

hello("Anne")

Classes are defined by the Class definitions keyword:

class Hello:

def __init__(self, name):

self._name = name

def say(self):

print('Hello', self._name)

h = Hello("Richard")

h.say()

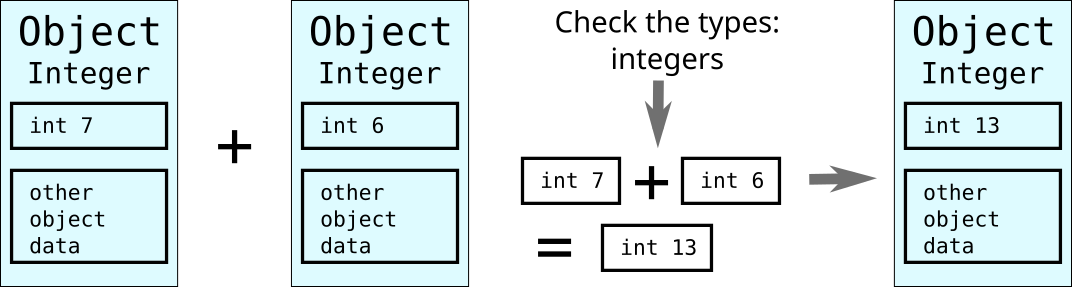

Python type system

Python is strongly and dynamically typed.

Strong here means, roughly, that it’s not possible to circumvent the type system (at least, not easily, and not without invoking undefined behavior).

x = 42

type(x)

x + "hello"

Dynamic typing means that types are determined at runtime, and a variable can be redefined to refer to an instance of another type:

x = 42

x = "hello"

Jargon: Types are associated with rvalues, not lvalues. In statically typed language, types are associated with lvalues, and are (typically) reified during compilation.

??? (lesson here)

Keypoints

Python offers a nice set of basic types as many other programming languages

Python is strongly typed and dynamically typed

Jupyter

Questions

What is the purpose of a “Computational narrative”?

What role does Jupyter play in development?

When is Jupyter not a good tool?

Objectives

This part will be too easy for some people, and slow for others. Still, we need to take some time to get everyone on the same page.

Be able to use Jupyter to run examples for the rest of the course.

Be able to run Jupyter in a directory do your own work.

You won’t be a Jupyter expert after this, but should be able to do the rest of the course.

What is Jupyter?

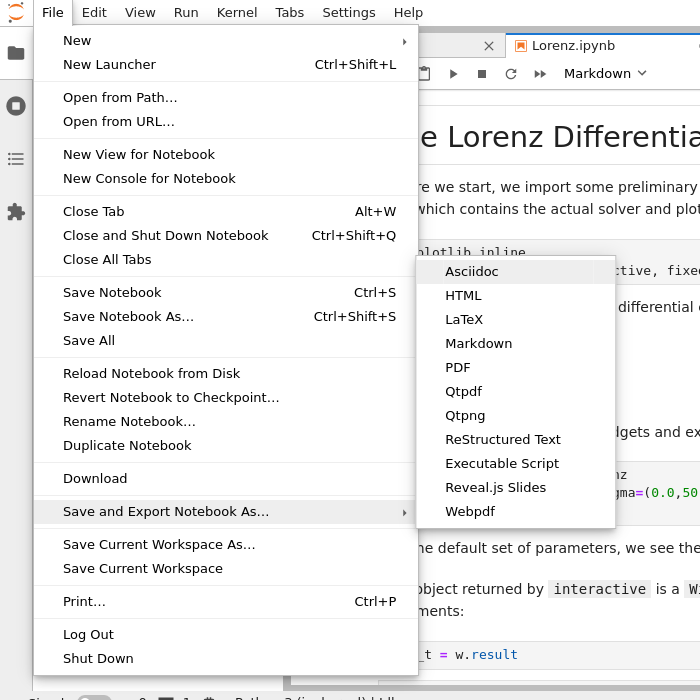

Jupyter is a web-based interactive computing system. It is most well known for having the notebook file format and Jupyter Notebook / Jupyter Lab. A notebook format contains both the input and the output of the code along documentation, all interleaved to create what is called a computational narrative.

Jupyter is good for data exploration and interactive work.

We use Jupyter a lot in this course because it is a good way that everyone can follow along, and minimizes the differences between operating systems.

Getting started with Jupyter

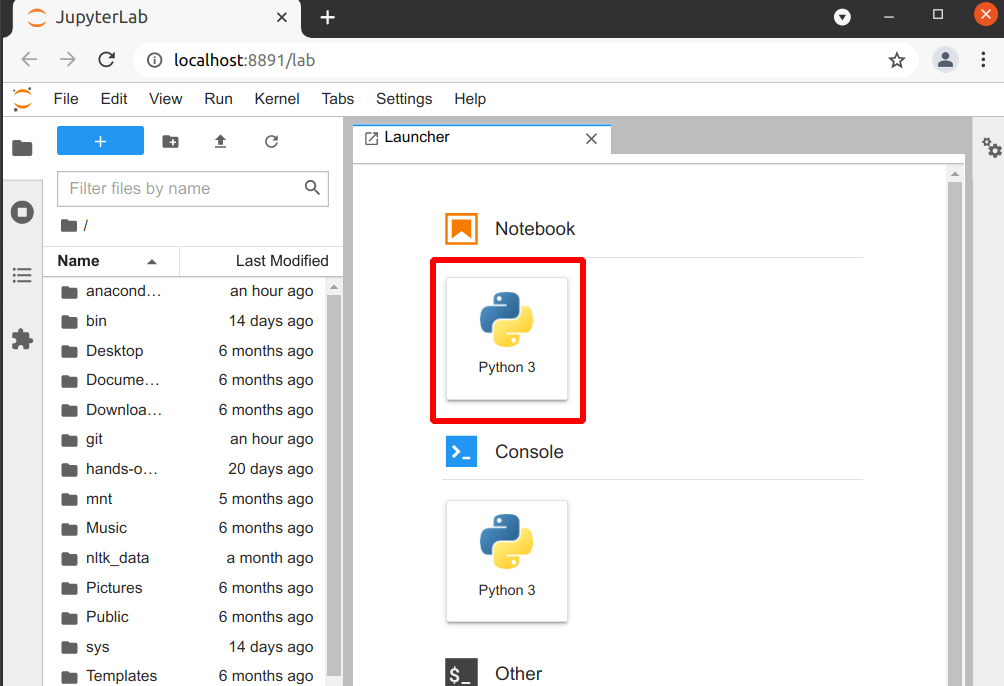

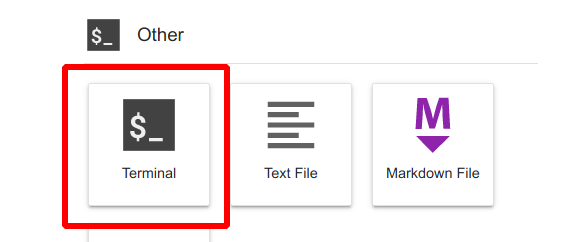

Start JupyterLab: there are different ways, depending on how you installed it. See the installation instructions. If JupyterLab isn’t working yet, you have some time to try to follow the installation instructions now.

This is the command line method we went though in our installation instructions.

$ source ~/miniforge3/bin/activate

$ conda activate python-for-scicomp

$ jupyter-lab

Start the Miniforge Prompt applicaiton first, then:

$ conda activate python-for-scicomp

$ jupyter-lab

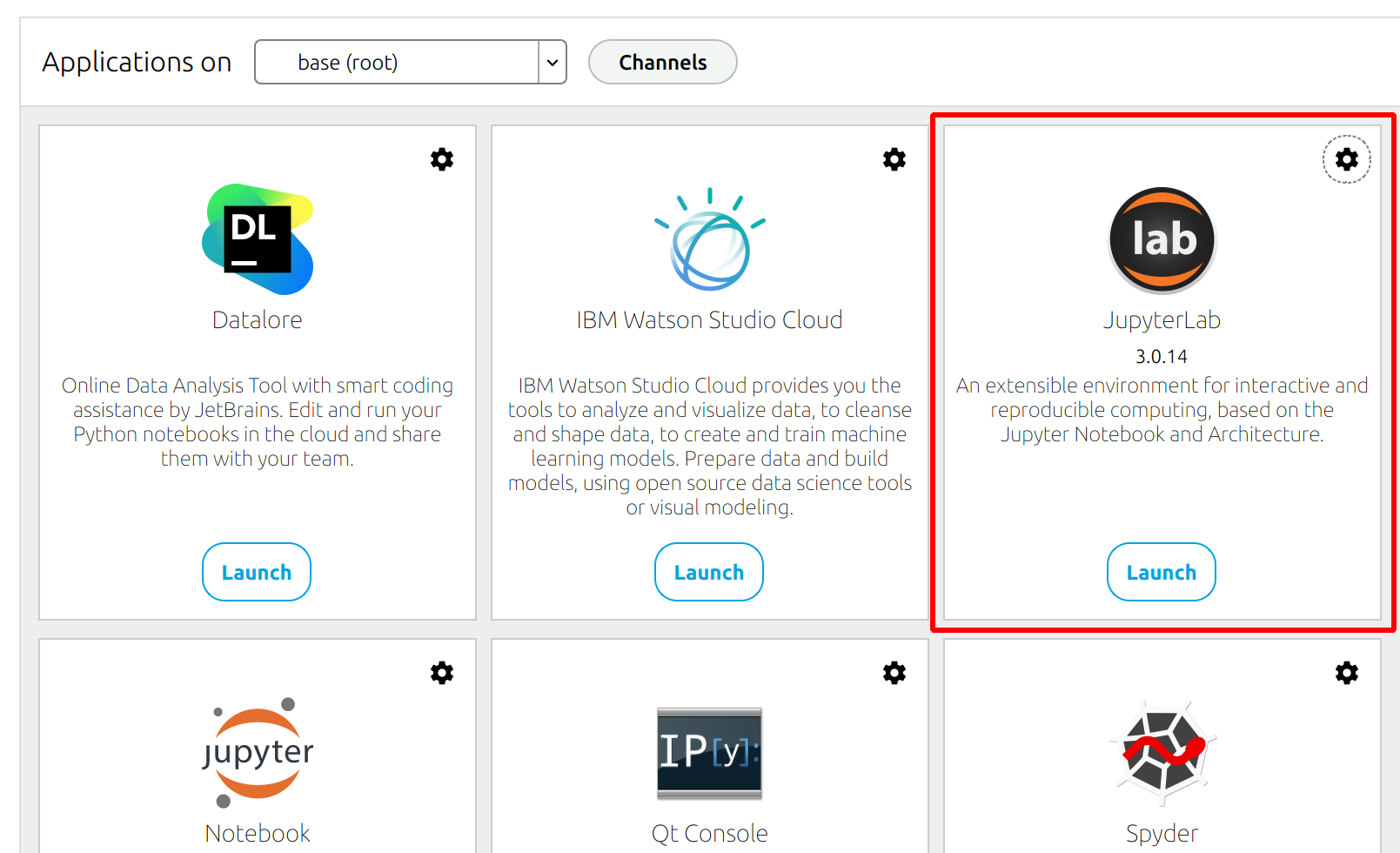

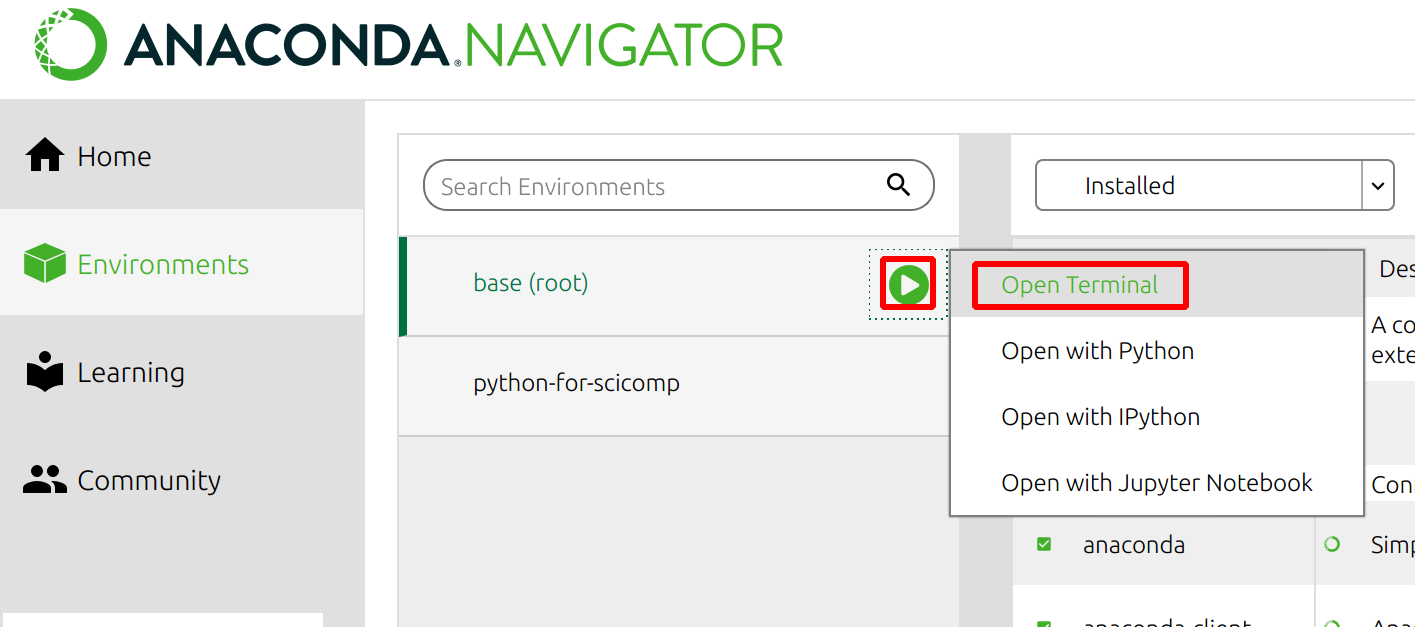

If you install the full Anaconda distribution, start the Anaconda Navigator. Make sure the python-for-scicomp environment is selected and you can start JupyterLab.

If you have installed Python and the workshop’s requiremnts some other way, you hopefully know how to activate that Python and the Python for SciComp software environment and start JupyterLab.

If not, see the installation instructions.

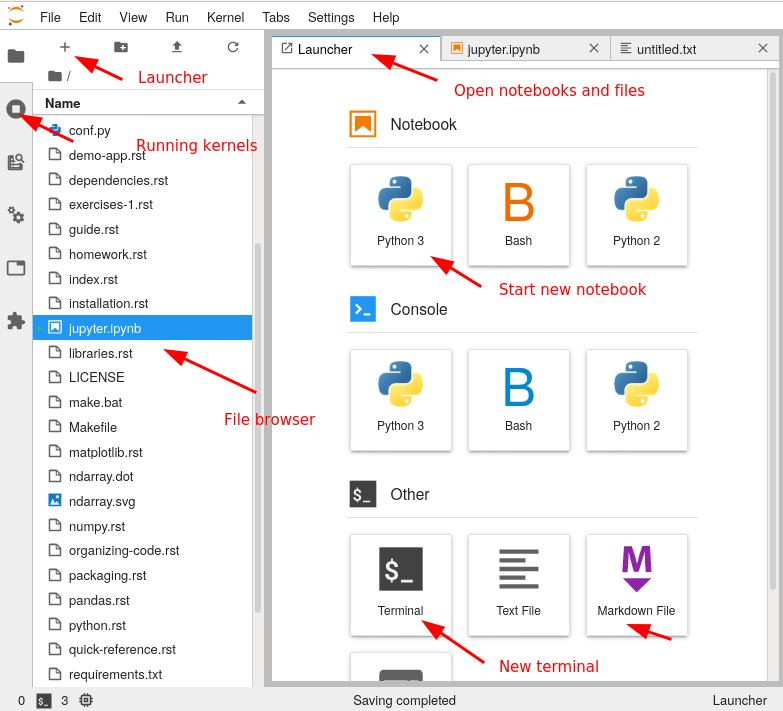

For practical purposes, JupyterLab is an integrated development environment that combines file browsing, notebooks, and code editing. There are many extensions that let you do whatever you may need.

Here, we see a tour of the JupyterLab interface:

Exercises 1

Exercises: Jupyter-1

If you aren’t set up with JupyterLab yet or these things don’t work, use this time to see the installation instructions and ask us any questions you may have.

Start Jupyter in the directory you want to use for this course.

If you are using Miniforge from the command line, you can navigate with

cdto a directory of your choice.If you are starting from the Anaconda Navigator, change to the directory you want to use.

Create a Python 3 notebook file. Save it. In the next section, you will add stuff to it.

(optional, but will be done in future lessons) Explore the file browser, try making some non-notebook text/py/md files and get used to that.

(optional, advanced) Look at the notebook file in a text editor. How does it work?

If everything works for you, this will end very quickly. You can begin reading the next sections independently.

Running code in Jupyter

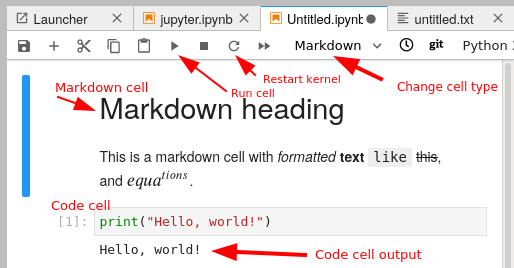

A notebook is divided into cells. Each cell has some input, and when it is executed an output appears right below it.

There are different types of cells: primarily code cells and markdown cells. You can switch between them with the menu bar above. Code cells run whatever language your notebook uses. Markdown is a lightweight way of giving style to text - you can check out this reference. For example the previous sentence is:

Markdown is a lightweight way of giving *style* to `text` - you

can check out [this reference](https://commonmark.org/help/).

When using keyboard shortcuts, you can switch between edit mode and command mode with Enter and Esc.

You enter code in a cell, and push the run button to run it. There are also some important shortcut keys:

Ctrl-Enter: Run cellShift-Enter: Run cell and select cell belowAlt-Enter: Run cell and insert new cell belowa/b: insert new cell above/belowm/y: markdown cell / code cellx: cut cellc: copy cellv: paste celld, d: delete cell

Now, let’s look at some code samples:

for i in range(3):

print(i)

0

1

2

print(sum(range(5)))

10

By convention, if the last thing in a cell is an object, that object gets printed:

sum(range(5))

sum(range(10))

45

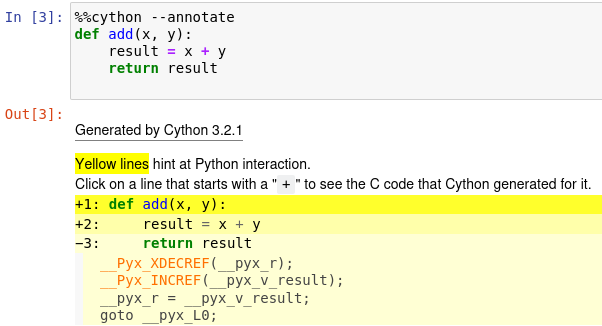

In addition to raw cells, there are magics, which exist outside of Python. They are a property of the runtime itself (in Python’s case, they come from IPython. For example, the following cell magic %%timeit will use the timeit module to time a cell by running it multiple times):

%%timeit

for x in range(1000000):

x**2

54.1 ms ± 993 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

Another example is %%bash which will turn the cell into a shell script (This will only work on operating systems with the Bash shell installed - MacOS and Linux at least):

%%bash

for x in $(seq 3) ; do

echo $x

done

1

2

3

A cell magic starts with

%%, goes on the first line of a cell, and applies to the whole cellA line magic starts with

%, goes on any line, and applies to that line.

Exercises 2

Exercises: Jupyter-2

Run some trivial code, such as

print(1).Run some slightly less trivial code, like print out the first ten Fibonacci numbers.

Make a Markdown cell above your code cell and give it a title and some description of your function. Use the reference to add a heading, bullet list, and some (bold, italic, or inline code)

Use the %%timeit magic function to time your Fibonacci function.

Again using

%%timeit, figure out the fastest way to sum the numbers 0 to 1000000.Once you are done, close your notebooks and other tabs you don’t need. Check the running sessions (hint: thin left sidebar) and shut down these kernels.

Solutions: Jupyter-2

–

Simple fibonacci code

a, b = 0, 1 for i in range(10): print(a) a, b = b, a+b

Markdown description

# Fibonacci * Start with two variables `a` and `b` * Repeat 10 times * Print old `a`, then increment both * Makes use of the Python *tuple assignment*: `a, b = new_a, new_b`

In this case, the print() statements get out of hand, so we comment that out. In general, writing output usually takes a lot of time reletive to the computation, so we don’t want to time that (unless output is the main point of the code, then we do have to time it!

%%timeit a, b = 0, 1 for i in range(10): #print(a) a, b = b, a+b

395 ns ± 10.2 ns per loop (mean ± std. dev. of 7 runs, 1000000 loops each)

–

–

Why Jupyter?

Being able to edit, check, re-edit quickly is great for prototyping and testing new ideas

Tends to be best either at the very beginning (getting started) or data analysis/plotting phases.

You can make a complete story - in one place. No more having code, figures, and description in different places.

Instead of sending plots to your advisor, send plots, the text there, and possibility of checking the code, too.

Notebook as an interactive publication itself - for example the discovery of gravitational waves data is released as a notebook.

Jupyter Notebooks display on Github - low-barrier way to share your analysis.

Teaching - great for getting difficult software distribution out of the way.

Why not Jupyter?

Jupyter is great for many things, but there are some problems if not used well:

They don’t promote modularity, and once you get started in a notebook it can be hard to migrate to modules.

They are difficult to test. There are things to run notebooks as unit tests like nbval, but it’s not perfect.

Notebooks can be version controlled (nbdime helps with that), but there are still limitations.

You can change code after you run it and run code out of order. This can make debugging hard and results irreproducible if you aren’t careful.

Notebooks aren’t named by default and tend to acquire a bunch of unrelated stuff. Be careful with organization!

Once lots of code is in notebooks, it can be hard to change to proper programs that can be scripted.

You can read more about these downsides https://scicomp.aalto.fi/scicomp/jupyter-pitfalls/.

But these downsides aren’t specific to Jupyter! They can easily happen in other sources, too. By studying these, you can make any code better, and find the right balance for what you do.

Exercises 3

Exercises: Jupyter-3

(optional) Discuss the following in groups:

Have any of you used Jupyter in a way that became impossible to maintain: too many files, code all spread out, not able to find your code and run it in the right order. How did you solve that?

On the other hand, what are your successes with Jupyter?

How can you prevent these problems by better development strategies?

See also

The CodeRefinery Jupyter lesson has much more, and the source of some of the content above.

Keypoints

Jupyter is powerful and can be used for interactive work

… but not the end solution when you need to scale up.

NumPy

Questions

Why use NumPy instead of pure python?

How to use basic NumPy?

What is vectorization?

Objectives

Understand the Numpy array object

Be able to use basic NumPy functionality

Understand enough of NumPy to seach for answers to the rest of your questions ;)

We expect most people to be able to do all the basic exercises here. It is probably quite easy for many people; we have advanced exercises at the end in that case.

NumPy is the most used library for scientific computing. Even if you are not using it directly, chances are high that some library uses it in the background. NumPy provides the high-performance multidimensional array object and tools to use it.

What is an array?

So, we already know about python lists, and that we can put different types of

data in the same list. For example, consider

[1, 2.5, 'asdf', False, [1.5, True]].

This is a Python list but it has different types for every element.

This makes them very flexible, but this comes at a cost: if we want to do

something to each element in the list, for example “add 1”, we need to consider

each element one-by-one, because “add 1” means something different if the item

is a number then when it is a string, or a sub-list. In scientific usage, we

want to be able to quickly perform operations on large groups of elements at

once, which is what NumPy arrays are optimized for.

An array is a ‘grid’ of values, with all the same type. It is indexed by tuples of non negative indices and provides the framework for multiple dimensions. An array has:

dtype - data type. Arrays always contain one type

shape - shape of the data, for example

3×2or3×2×500or even500(one dimensional) or()(zero dimensional).data- raw data storage in memory. This can be passed to C or Fortran code for efficient calculations.

To test the performance of pure Python vs NumPy we can write in our jupyter notebook:

Create one list and one ‘empty’ list, to store the result in

a = list(range(10000))

b = [ 0 ] * 10000

In a new cell starting with %%timeit, loop through the list a and fill the second list b with a squared

%%timeit

for i in range(len(a)):

b[i] = a[i]**2

That looks and feels quite fast. But let’s take a look at how NumPy performs for the same task.

So for the NumPy example, create one array and one ‘empty’ array to store the result in

import numpy as np

a = np.arange(10000)

b = np.zeros(10000)

In a new cell starting with %%timeit, fill b with a squared

%%timeit

b = a ** 2

We see that compared to working with numpy arrays, working with traditional python lists is actually slow.

Creating arrays

There are different ways of creating arrays (numpy.array(), numpy.ndarray.shape, numpy.ndarray.size):

a = np.array([1,2,3]) # 1-dimensional array (rank 1)

b = np.array([[1,2,3],[4,5,6]]) # 2-dimensional array (rank 2)

b.shape # the shape (rows,columns)

b.size # number of elements

In addition to above ways of creating arrays, there are many other ways of creating arrays depending on content (numpy.zeros(), numpy.ones(), numpy.full(), numpy.eye(), numpy.arange(), numpy.linspace()):

np.zeros((2, 3)) # 2x3 array with all elements 0

np.ones((1,2)) # 1x2 array with all elements 1

np.full((2,2),7) # 2x2 array with all elements 7

np.eye(2) # 2x2 identity matrix

np.arange(10) # Evenly spaced values in an interval

np.linspace(0,9,10) # same as above, see exercise

c = np.ones((3,3))

d = np.ones((3, 2), 'bool') # 3x2 boolean array

Arrays can also be stored and read from a (.npy) file (numpy.save(), numpy.load()):

np.save('x.npy', a) # save the array a to a .npy file

x = np.load('x.npy') # load an array from a .npy file and store it in variable x

In many occasions (especially when something goes different than expected) it is useful to check and control the datatype of the array (numpy.ndarray.dtype, numpy.ndarray.astype()):

d.dtype # datatype of the array

d.astype('int') # change datatype from boolean to integer

In the last example, .astype('int'), it will make a copy of the

array, and re-allocate data - unless the dtype is exactly the same as

before. Understanding and minimizing copies is one of the most

important things to do for speed.

Exercises 1

Exercises: Numpy-1

Datatypes Try out

np.arange(10)andnp.linspace(0,9,10), what is the difference? Can you adjust one to do the same as the other?Datatypes Create a 3x2 array of random float numbers (check

numpy.random.random()) between 0 and 1. Now change the arrays datatype to int (array.astype). How does the array look like?Reshape Create a 3x2 array of random integer numbers between 0 and 10. Change the shape of the array (check

array.reshape) in any way possible. What is not possible?NumPyI/O Save above array to .npy file (

numpy.save()) and read it in again.

Solutions: Numpy-1

Datatypes

np.arange(10)results inarray([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])with dtype int64,while

np.linspace(0,9,10)results inarray([0., 1., 2., 3., 4., 5., 6., 7., 8., 9.])with dtype float64.Both

np.linspaceandnp.arangetake dtype as an argument and can be adjusted to match each other in that way.

Datatypes eg

a = np.random.random((3,2)).a.astype('int')results in an all zero array, not as maybe expected the rounded int (all numbers [0, 1) are cast to 0).Reshape eg

b = np.random.randint(0,10,(3,2)).

b.reshape((6,1))andb.reshape((2,3))possible.It is not possible to reshape to shapes using more or less elements than

b.size = 6, so for exampleb.reshape((12,1))gives an error.

NumPyI/O

np.save('x.npy', b)andx = np.load('x.npy')

Array maths and vectorization

Clearly, you can do math on arrays. Math in NumPy is very fast because it is implemented in C or Fortran - just like most other high-level languages such as R, Matlab, etc do.

By default, basic arithmetic (+, -, *, /) in NumPy is

element-by-element. That is, the operation is performed for each element in the

array without you having to write a loop. We say an operation is “vectorized”

when the looping over elements is carried out by NumPy internally, which uses

specialized CPU instructions for this that greatly outperform a regular Python

loop.

Note that unlike Matlab, where * means matrix multiplication, NumPy uses

* to perform element-by-element multiplication and uses the @ symbol to

perform matrix multiplication:

a = np.array([[1,2],[3,4]])

b = np.array([[5,6],[7,8]])

# Addition

c = a + b

d = np.add(a,b)

# Matrix multiplication

e = a @ b

f = np.dot(a, b)

Other common mathematical operations include: - (numpy.subtract), * (numpy.multiply), / (numpy.divide), .T (numpy.transpose()), numpy.sqrt, numpy.sum(), numpy.mean(), …

Exercises 2

Exercises: Numpy-2

Create the following arrays:

# 1-dimensional arrays

x = np.array([1, 10, 100])

# TODO: similar to `x`, create another 1-dimensional array with shape (3,)

# y = np.array(...)

# 2-dimensional arrays

a = np.array([[1, 2], [3, 4]])

# TODO: similar to `a`, create another 2-dimensional array with shape (2, 2)

# b = np.array(...)

Matrix multiplication What is the difference between

numpy.multiplyandnumpy.dot()? Try calling these functions with eitherx, y(1D arrays) ora, b(2D arrays) as input and observe the behaviour.Axis What is the difference between

np.sum(a, axis=1)vsnp.sum(a, axis=0)on a two-dimensional array? What if you leave out the axis parameter?

Solutions: Numpy-2

Matrix multiplication

np.multiplydoes elementwise multiplication on two arrays. The functionnp.dotenables: - dot product and returns a scalar, when both input arrays are 1 dimensional - matrix multiplication and returns back a 2-dimensional array, when both the input arrays are 2 dimensional However,a @ bis preferred overnp.dot(a, b)to express matrix multiplication.Axis

axis=1does the operation (here:np.sum) over each row, while axis=0 does it over each column. If axis is left out, the sum of the full array is given.

Indexing and Slicing

See also

NumPy has many ways to extract values out of arrays:

You can select a single element

You can select rows or columns

You can select ranges where a condition is true.

Clever and efficient use of these operations is a key to NumPy’s speed: you should try to cleverly use these selectors (written in C) to extract data to be used with other NumPy functions written in C or Fortran. This will give you the benefits of Python with most of the speed of C.

a = np.arange(16).reshape(4, 4) # 4x4 matrix from 0 to 15

a[0] # first row

a[:,0] # first column

a[1:3,1:3] # middle 2x2 array

a[(0, 1), (1, 1)] # second element of first and second row as array

Boolean indexing on above created array:

idx = (a > 0) # creates boolean matrix of same size as a

a[idx] # array with matching values of above criterion

a[a > 0] # same as above in one line

Exercises 3

Exercise: Numpy-3

a = np.eye(4)

b = a[:, 0]

b[0] = 5

Try out the above code.

How does

alook like beforebhas changed and after?How could it be avoided?

Solution: Numpy-3

View vs copy: The change in b has also changed the array a!

This is because b is merely a view or a shallow copy of a part of array a. Both

variables point to the same memory. Hence, if one is changed, the other

one also changes.

In this example, if you need to keep the original array as is, use

np.copy(a) or np.copy(a[:, 0])

to create a new deep copy of the whole or a slice of array respectively,

before updating b.

See also

NumPy’s documentation on Copies and views

Types of operations

There are different types of standard operations in NumPy:

ufuncs, “universal functions”: These are element-by-element functions with standardized arguments:

One, two, or three input arguments

For example,

a + bis similar tonp.add(a, b)but the ufunc has more control.out=output argument, store output in this array (rather than make a new array) - saves copying data!See the full reference

They also do broadcasting (ref). Can you add a 1-dimensional array of shape (3) to an 2-dimensional array of shape (3, 2)? With broadcasting you can!

a = np.array([[1, 2, 3], [4, 5, 6]]) b = np.array([10, 10, 10]) a + b # array([[11, 12, 13], # [14, 15, 16]])

Broadcasting is smart and consistent about what it does, which I’m not clever enough to explain quickly here: the manual page on broadcasting. The basic idea is that it expands dimensions of the smaller array so that they are compatible in shape.

Array methods do something to one array:

Some of these are the same as ufuncs:

x = np.arange(12) x.shape = (3, 4) x # array([[ 0, 1, 2, 3], # [ 4, 5, 6, 7], # [ 8, 9, 10, 11]]) x.max() # 11 x.max(axis=0) # array([ 8, 9, 10, 11]) x.max(axis=1) # array([ 3, 7, 11])

Other functions: there are countless other functions covering linear algebra, scientific functions, etc.

Exercises 4

Exercises: Numpy-4

In-place addition: Create an array, add it to itself using a ufunc.

In-place addition (advanced): Create an array of

dtype='float', and an array ofdtype='int'. Try to use the int array as the output argument of the first two arrays.Output arguments and timing Repeat the initial

b = a ** 2example using a ufunc and time it. Can you make it even faster using the output argument?

Solution: Numpy-4

in-place addition:

x = np.array([1, 2, 3]) id(x) # get the memory-ID of x np.add(x, x, out=x) # Third argument is output array np.add(x, x, out=x) print(x) id(x) # get the memory-ID of x # - notice it is the same

Note that

np.add()writes the result to the output array (out=) and the function returns that same array.Output arguments and timing In this case, on my computer, it was actually slower (this is due to it being such a small array!):

a = np.arange(10_000) b = np.zeros(10_000)

%%timeit numpy.square(a, out=b)

This is a good example of why you always need to time things before deciding what is best.

Note: the

_inside numbers is just for human readability and is ignored by python.

Linear algebra and other advanced math

In general, you use arrays (n-dimensions), not matrixes

(specialized 2-dimensional) in NumPy.

Internally, NumPy doesn’t invent its own math routines: it relies on BLAS and LAPACK to do this kind of math - the same as many other languages.

Scipy has even more functions

Many other libraries use NumPy arrays as the standard data structure: they take data in this format, and return it similarly. Thus, all the other packages you may want to use are compatible

If you need to write your own fast code in C, NumPy arrays can be used to pass data. This is known as extending Python.

Additional exercises

Numpy-5

If you have extra time, try these out. These are advanced and optional, and will not be done in most courses.

Reverse a vector. Given a vector, reverse it such that the last element becomes the first, e.g.

[1, 2, 3]=>[3, 2, 1]Create a 2D array with zeros on the borders and 1 inside.

Create a random array with elements [0, 1), then add 10 to all elements in the range [0.2, 0.7).

What is

np.round(0.5)? What isnp.round(1.5)? Why?In addition to

np.round, explorenumpy.ceil,numpy.floor,numpy.trunc. In particular, take note of how they behave with negative numbers.Recall the identity \(\sin^2(x) + \cos^2(x) = 1\). Create a random 4x4 array with values in the range [0, 10). Now test the equality with

numpy.equal. What result do you get withnumpy.allclose()instead ofnp.equal?Create a 1D array with 10 random elements. Sort it.

What’s the difference between

np_array.sort()andnp.sort(np_array)?For the random array in question 8, instead of sorting it, perform an indirect sort. That is, return the list of indices which would index the array in sorted order.

Create a 4x4 array of zeros, and another 4x4 array of ones. Next combine them into a single 8x4 array with the content of the zeros array on top and the ones on the bottom. Finally, do the same, but create a 4x8 array with the zeros on the left and the ones on the right.

NumPy functionality Create two 2D arrays and do matrix multiplication first manually (for loop), then using the np.dot function. Use %%timeit to compare execution times. What is happening?

Solution Numpy-5

One solution is:

a = np.array([1, 2, 3]) a[::-1]

One solution is:

b = np.ones((10,10)) b[:,[0, -1]]=0 b[[0, -1],:]=0

A possible solution is:

x = np.random.rand(100) y = x + 10*(x >= 0.2)*(x < 0.7)

For values exactly halfway between rounded decimal values, NumPy rounds to the nearest even value.

Let’s test those functions with few negative and positive values:

a = np.array([-3.3, -2.5, -1.5, -0.75, -0.5, 0.5, 0.75, 1.5, 2.5, 3]) np.round(a) # [-3. -2. -2. -1. -0. 0. 1. 2. 2. 3.] np.ceil(a) # [-3. -2. -1. -0. -0. 1. 1. 2. 3. 3.] np.floor(a) # [-4. -3. -2. -1. -1. 0. 0. 1. 2. 3.] np.trunc(a) # [-3. -2. -1. -0. -0. 0. 0. 1. 2. 3.]

One solution is:

x = 10*np.random.rand(4,4) oo = np.ones((4,4)) s2c2 = np.square(np.sin(x))+np.square(np.cos(x)) np.equal(oo,s2c2) np.allclose(oo,s2c2)

Sorting the array itself, without copying it:

x = np.random.rand(10) x.sort()

NumPy.sort() returns a sorted copy of an array.

np.argsort(x)One solution is:

z = np.zeros((4,4)) o = np.ones((4,4)) np.concatenate((z,o)) np.concatenate((z,o),axis=1)

Using numpy without numpy functionality (np.dot) in this case, is still slow.

See also

Keypoints

NumPy is a powerful library every scientist using python should know about, since many other libraries also use it internally.

Be aware of some NumPy specific peculiarities

Advanced NumPy

Questions

How can NumPy be so fast?

Why are some things fast and some things slow?

How can I control whether NumPy makes a copy or operates in-place?

Objectives

Understand why NumPy has so many specialized functions for specific operations

Understand the underlying machinery of the Numpy

ndarrayobjectUnderstand when and why NumPy makes a copy of the data rather than a view

This is intended as a follow-up to the basic NumPy lesson. The intended audience for this advanced lesson is those who have used NumPy before and now want to learn how to get the most out of this amazing package.

Python, being an interpreted programming language, is quite slow. Manipulating large amounts of numbers using Python’s build-in lists would be impractically slow for any serious data analysis. Yet, the NumPy package can be really fast. How does it do that? We will dive into how NumPy works behind the scenes and use this knowledge to our advantage. This lesson also serves as an introduction to reading the definitive work on this topic: Guide to NumPy by Travis E. Oliphant, its initial creator.

NumPy can be really fast

Python, being an interpreted programming language, is quite slow. Manipulating large amounts of numbers using Python’s build-in lists would be impractically slow for any serious data analysis. Yet, the numpy package can be really fast.

How fast can NumPy be? Let’s race NumPy against C. The contest will be to sum together 100 000 000 random numbers. We will give the C version below, you get to write the NumPy version:

#include <stdlib.h>

#include <stdio.h>

#define N_ELEMENTS 100000000

int main(int argc, char** argv) {

double* a = (double*) malloc(sizeof(double) * N_ELEMENTS);

int i;

for(i=0; i<N_ELEMENTS; ++i) {

a[i] = (double) rand() / RAND_MAX;

}

double sum = 0;

for(i=0; i<N_ELEMENTS; ++i) {

sum += a[i];

}

printf("%f", sum);

return 0;

}

Exercise 1

Exercises: Numpy-Advanced-1

Write a Python script that uses NumPy to generate 100 million (100000000) random numbers and add them all together. Time how long it takes to execute. Can you beat the C version?

If you are having trouble with this, we recommend completing the basic NumPy lesson before continuing with this advanced lesson. If you are taking a live course - don’t worry, watch and learn and explore some during the exercises!

Solutions: Numpy-Advanced-1

The script can be implemented like this:

import numpy as np

print(np.random.rand(100_000_000).sum())

The libraries behind the curtain: MKL and BLAS

NumPy is fast because it outsources most of its heavy lifting to heavily optimized math libraries, such as Intel’s Math Kernel Library (MKL), which are in turn derived from a Fortran library called Basic Linear Algebra Subprograms (BLAS). BLAS for Fortran was published in 1979 and is a collection of algorithms for common mathematical operations that are performed on arrays of numbers. Algorithms such as matrix multiplication, computing the vector length, etc. The API of the BLAS library was later standardized, and today there are many modern implementations available. These libraries represent over 40 years of optimizing efforts and make use of specialized CPU instructions for manipulating arrays. In other words, they are fast.

One of the functions inside the BLAS library is a function to compute the “norm” of a vector, which is the same as computing its length, using the Pythagorean theorem: \(\sqrt(a[0]^2 + a[1]^2 + \ldots)\).

Let’s race the BLAS function versus a naive “manual” version of computing the vector norm. We start by creating a decently long vector filled with random numbers:

import numpy as np

rng = np.random.default_rng(seed=0)

a = rng.random(100_000_000)

We now implement the Pythagorean theorem using basic NumPy functionality and

use %%timeit to record how long it takes to execute:

%%timeit

l = np.sqrt(np.sum(a ** 2))

print(l)

And here is the version using the specialized BLAS function norm():

%%timeit

l = np.linalg.norm(a)

print(l)

NumPy tries to avoid copying data

Understanding the kind of operations that are expensive (take a long time) and

which ones are cheap can be surprisingly hard when it comes to NumPy. A big

part of data processing speed is memory management. Copying big arrays takes

time, so the less of that we do, the faster our code runs. The rules of when

NumPy copies data are not trivial and it is worth your while to take a closer

look at them. This involves developing an understanding of how NumPy’s

numpy.ndarray datastructure works behind the scenes.

An example: matrix transpose

Transposing a matrix means that all rows become columns and all columns become

rows. All off-diagonal values change places. Let’s see how long NumPy’s

transpose function takes, by transposing a huge (10 000 ✕ 20 000)

rand() matrix:

import numpy as np

a = np.random.rand(10_000, 20_000)

print(f'Matrix `a` takes up {a.nbytes / 10**6} MB')

Let’s time the transpose() method:

%%timeit

b = a.transpose()

It takes mere nanoseconds to transpose 1600 MB of data! How?

The ndarray exposed

The first thing you need to know about numpy.ndarray is that the

memory backing it up is always a flat 1D array. For example, a 2D matrix is

stored with all the rows concatenated as a single long vector.

NumPy is faking the second dimension behind the scenes! When we request the

element at say, [2, 3], NumPy converts this to the correct index in the

long 1D array [11].

Converting

[2, 3]→[11]is called “raveling”The reverse, converting

[11]→[2, 3]is called “unraveling”

The implications of this are many, so take let’s take some time to understand

it properly by writing our own ravel() function.

Exercise 2

Exercises: Numpy-Advanced-2

Write a function called ravel() that takes the row and column of an

element in a 2D matrix and produces the appropriate index in an 1D array,

where all the rows are concatenated. See the image above to remind yourself

how each row of the 2D matrix ends up in the 1D array.

The function takes these inputs:

rowThe row of the requested element in the matrix as integer index.

colThe column of the requested element in the matrix as integer index.

n_rowsThe total number of rows of the matrix.

n_colsThe total number of columns of the matrix.

Here are some examples of input and desired output:

ravel(2, 3, n_rows=4, n_cols=4)→11

ravel(2, 3, n_rows=4, n_cols=8)→19

ravel(0, 0, n_rows=1, n_cols=1)→0

ravel(3, 3, n_rows=4, n_cols=4)→15

ravel(3_465, 18_923, n_rows=10_000, n_cols=20_000)→69_318_923

Solutions: Numpy-Advanced-2

The function can be implemented like this:

def ravel(row, col, n_rows, n_cols):

return row * n_cols + col

Strides

As seen in the exercise, to get to the next row, we have to skip over

n_cols indices. To get to the next column, we can just add 1. To generalize

this code to work with an arbitrary number of dimensions, NumPy has the concept

of “strides”:

np.zeros((4, 8)).strides # (64, 8)

np.zeros((4, 5, 6, 7, 8)).strides # (13440, 2688, 448, 64, 8)

The strides attribute contains for each dimension, the number of bytes (not array indexes) we

have to skip over to get to the next element along that dimension. For example,

the result above tells us that to get to the next row in a 4 ✕ 8 matrix, we

have to skip ahead 64 bytes. 64? Yes! We have created a matrix consisting of

double-precision floating point numbers. Each one of those bad boys takes up 8

bytes, so all the indices are multiplied by 8 to get to the proper byte in the

memory array. To move to the next column in the matrix, we skip ahead 8 bytes.

So now we know the mystery behind the speed of transpose(). NumPy can avoid

copying any data by just modifying the strides of the array:

import numpy as np

a = np.random.rand(10_000, 20_000)

b = a.transpose()

print(a.strides) # (160000, 8)

print(b.strides) # (8, 160000)

Another example: reshaping

Modifying the shape of an array through numpy.reshape() is also

accomplished without any copying of data by modifying the strides:

a = np.random.rand(20_000, 10_000)

print(f'{a.strides=}') # (80000, 8)

b = a.reshape(40_000, 5_000)

print(f'{b.strides=}') # (40000, 8)

c = a.reshape(20_000, 5_000, 2)

print(f'{c.strides=}') # (80000, 16, 8)

Exercises 3

Exercises: Numpy-Advanced-3

A little known feature of NumPy is the numpy.stride_tricks module

that allows you to modify the strides attribute directly. Playing

around with this is very educational.

Create your own

transpose()function that will transpose a 2D matrix by reversing itsshapeandstridesattributes usingnumpy.lib.stride_tricks.as_strided().Create a (5 ✕ 100 000 000 000) array containing on the first row all 1’s, the second row all 2’s, and so on. Start with an 1D array

a = np.array([1., 2., 3., 4., 5.])and modify itsshapeandstridesattributes usingnumpy.lib.stride_tricks.as_strided()to obtain the desired 2D matrix:array([[1., 1., 1., ..., 1., 1., 1.], [2., 2., 2., ..., 2., 2., 2.], [3., 3., 3., ..., 3., 3., 3.], [4., 4., 4., ..., 4., 4., 4.], [5., 5., 5., ..., 5., 5., 5.]])

Solutions: Numpy-Advanced-3

The

transpose()function can be implemented like this:from numpy.lib.stride_tricks import as_strided def transpose(a): return as_strided(a, shape=a.shape[::-1], strides=a.strides[::-1]) # Testing the function on a small matrix a = np.array([[1, 2, 3], [4, 5, 6]]) print('Before transpose:') print(a) print('After transpose:') print(transpose(a))

By setting one of the

.stridesto 0, we can repeat a value infinitely many times without using any additional memory:from numpy.lib.stride_tricks import as_strided a = np.array([1., 2., 3., 4., 5.]) as_strided(a, shape=(5, 100_000_000_000), strides=(8, 0))

A fast thing + a fast thing = a fast thing?

If numpy.transpose() is fast, and numpy.reshape() is fast, then

doing them both must be fast too, right?:

# Create a large array

a = np.random.rand(10_000, 20_000)

Measuring the time it takes to first transpose and then reshape:

%%timeit -n 1 -r 1

a.T.reshape(40_000, 5_000)

In this case, the data actually had to be copied and it’s super slow (it takes

seconds instead of nanoseconds). When the array is first created, it is laid

out in memory row-by-row (see image above). The transpose left the data laid

out in memory column-by-column. To see why the copying of data was inevitable,

look at what happens to this smaller (2 ✕ 3) matrix after transposition and

reshaping. You can verify for yourself there is no way to get the final array

based on the first array and some clever setting of the strides:

a = np.array([[1, 2, 3], [4, 5, 6]])

print('Original array:')

print(a)

print('\nTransposed:')

print(a.T)

print('\nTransposed and then reshaped:')

print(a.T.reshape(2, 3))

Copy versus view

Whenever NumPy constructs a new array by modifying the strides instead of

copying data, we create a “view”. This also happens when we select only

a portion of an existing matrix. Whenever a view is created, the

numpy.ndarray object will have a reference to the original array in

its base attribute:

a = np.zeros((5, 5))

print(a.base) # None

b = a[:2, :2]

print(b.base.shape) # (5, 5)

Warning

When you create a large array and select only a portion of it, the large array will stay in memory if a view was created!

The new array b object has a pointer to the same memory buffer as the array

it has been derived from:

print(a.__array_interface__['data'])

print(b.__array_interface__['data'])

Views are created by virtue of modifying the value of the shape attribute

and, if necessary, apply an offset to the pointer into the memory buffer so it

no longer points to the start of the buffer, but somewhere in the middle:

b = a[1:3, 1:3] # This view does not start at the beginning

offset = b.__array_interface__['data'][0] - a.__array_interface__['data'][0]

print('Offset:', offset, 'bytes') # Offset: 48 bytes

Since the base array and its derived view share the same memory, any changes to the data in a view also affects the data in the base array:

b[0, 0] = 1.

print(a) # Original matrix was modified

Whenever you index an array, NumPy will attempt to create a view. Whether or not that succeeds depends on the memory layout of the array and what kind of indexing operation was done. If no view can be created, NumPy will create a new array and copy over the selected data:

c = a[[0, 2]] # Select rows 0 and 2

print(c.base) # None. So not a view.

See also

Keypoints

The best way to make your code more efficient is to learn more about the NumPy API and use specialized functions whenever possible.

NumPy will avoid copying data whenever it can. Whether it can depends on what kind of layout the data is currently in.

Pandas

Questions

How do I learn a new Python package?

How can I use pandas dataframes in my research?

Objectives

Learn simple and some more advanced usage of pandas dataframes

Get a feeling for when pandas is useful and know where to find more information

Understand enough of pandas to be able to read its documentation.

Pandas is a Python package that provides high-performance and easy to use data structures and data analysis tools. This page provides a brief overview of pandas, but the open source community developing the pandas package has also created excellent documentation and training material, including:

A Getting started guide (including tutorials and a 10 minute flash intro)

A “10 minutes to Pandas” tutorial

Thorough Documentation containing a user guide, API reference and contribution guide

A cookbook

A quick Pandas preview

Let’s get a flavor of what we can do with pandas (you won’t be able to follow everything yet). We will be working with an example dataset containing the passenger list from the Titanic, which is often used in Kaggle competitions and data science tutorials. First step is to load pandas:

import pandas as pd

We can download the data from this GitHub repository

by visiting the page and saving it to disk, or by directly reading into

a DataFrame:

url = "https://raw.githubusercontent.com/pandas-dev/pandas/master/doc/data/titanic.csv"

titanic = pd.read_csv(url, index_col='Name')

We can now view the dataframe to get an idea of what it contains and print some summary statistics of its numerical data:

# print the first 5 lines of the dataframe

titanic.head()

# print summary statistics for each column

titanic.describe()

Ok, so we have information on passenger names, survival (0 or 1), age, ticket fare, number of siblings/spouses, etc. With the summary statistics we see that the average age is 29.7 years, maximum ticket price is 512 USD, 38% of passengers survived, etc.

Let’s say we’re interested in the survival probability of different

age groups. With two one-liners, we can find the average age of those

who survived or didn’t survive, and plot corresponding histograms of

the age distribution (pandas.DataFrame.groupby(), pandas.DataFrame.hist()):

print(titanic.groupby("Survived")["Age"].mean())

titanic.hist(column='Age', by='Survived', bins=25, figsize=(8,10),

layout=(2,1), zorder=2, sharex=True, rwidth=0.9);

Clearly, pandas dataframes allows us to do advanced analysis with very few commands, but it takes a while to get used to how dataframes work so let’s get back to basics.

Getting help

Series and DataFrames have a lot functionality, but

how can we find out what methods are available and how they work? One way is to visit

the API reference

and reading through the list.

Another way is to use the autocompletion feature in Jupyter and type e.g.

titanic["Age"]. in a notebook and then hit TAB twice - this should open

up a list menu of available methods and attributes.

Jupyter also offers quick access to help pages (docstrings) which can be more efficient than searching the internet. Two ways exist:

Write a function name followed by question mark and execute the cell, e.g. write

titanic.hist?and hitSHIFT + ENTER.Write the function name and hit

SHIFT + TAB.Right click and select “Show contextual help”. This tab will update with help for anything you click.

What’s in a dataframe?

As we saw above, pandas dataframes are a powerful tool for working with tabular data.

A pandas

pandas.DataFrame

is composed of rows and columns:

Each column of a dataframe is a pandas.Series object

- a dataframe is thus a collection of series:

# print some information about the columns

titanic.info()

Unlike a NumPy array, a dataframe can combine multiple data types, such as

numbers and text, but the data in each column is of the same type. So we say a

column is of type int64 or of type object.

Let’s inspect one column of the Titanic passenger list data (first downloading and reading the titanic.csv datafile into a dataframe if needed, see above):

titanic["Age"]

titanic.Age # same as above

type(titanic["Age"]) # a pandas Series object

The columns have names. Here’s how to get them (columns):

titanic.columns

However, the rows also have names! This is what Pandas calls the index:

titanic.index

We saw above how to select a single column, but there are many ways of

selecting (and setting) single or multiple rows, columns and

values. We can refer to columns and rows either by their name

(loc, at) or by

their index (iloc,

iat):

titanic.loc['Lam, Mr. Ali',"Age"] # select single value by row and column

titanic.loc[:'Lam, Mr. Ali',"Survived":"Age"] # slice the dataframe by row and column *names*

titanic.iloc[0:2,3:6] # same slice as above by row and column *numbers*

titanic.at['Lam, Mr. Ali',"Age"] = 42 # set single value by row and column *name* (fast)

titanic.at['Lam, Mr. Ali',"Age"] # select single value by row and column *name* (fast)

titanic.iat[0,5] # select same value by row and column *number* (fast)

titanic["is_passenger"] = True # set a whole column

Dataframes also support boolean indexing, just like we saw for numpy

arrays:

titanic[titanic["Age"] > 70]

# ".str" creates a string object from a column

titanic[titanic.index.str.contains("Margaret")]

What if your dataset has missing data? Pandas uses the value numpy.nan

to represent missing data, and by default does not include it in any computations.

We can find missing values, drop them from our dataframe, replace them

with any value we like or do forward or backward filling:

titanic.isna() # returns boolean mask of NaN values

titanic.dropna() # drop missing values

titanic.dropna(how="any") # or how="all"

titanic.dropna(subset=["Cabin"]) # only drop NaNs from one column

titanic.fillna(0) # replace NaNs with zero

titanic.fillna(method='ffill') # forward-fill NaNs

Exercises 1

Exploring dataframes

Have a look at the available methods and attributes using the API reference or the autocomplete feature in Jupyter.

Try out a few methods using the Titanic dataset and have a look at the docstrings (help pages) of methods that pique your interest

Compute the mean age of the first 10 passengers by slicing and the

pandas.DataFrame.mean()method(Advanced) Using boolean indexing, compute the survival rate (mean of “Survived” values) among passengers over and under the average age.

Solution

Mean age of the first 10 passengers:

titanic.iloc[:10,:]["Age"].mean()

or:

titanic.loc[:"Nasser, Mrs. Nicholas (Adele Achem)","Age"].mean()

or:

titanic.iloc[:10,4].mean()

Survival rate among passengers over and under average age:

titanic[titanic["Age"] > titanic["Age"].mean()]["Survived"].mean()

and:

titanic[titanic["Age"] < titanic["Age"].mean()]["Survived"].mean()

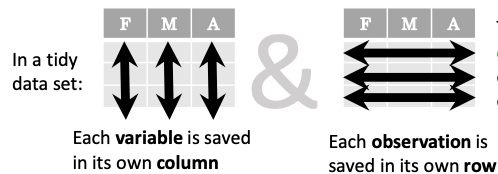

Tidy data

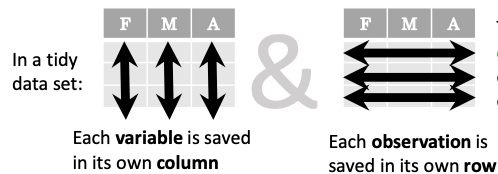

The above analysis was rather straightforward thanks to the fact that the dataset is tidy.

In short, columns should be variables and rows should be measurements, and adding measurements (rows) should then not require any changes to code that reads the data.

What would untidy data look like? Here’s an example from some run time statistics from a 1500 m running event:

runners = pd.DataFrame([

{'Runner': 'Runner 1', 400: 64, 800: 128, 1200: 192, 1500: 240},

{'Runner': 'Runner 2', 400: 80, 800: 160, 1200: 240, 1500: 300},

{'Runner': 'Runner 3', 400: 96, 800: 192, 1200: 288, 1500: 360},

])

What makes this data untidy is that the column names 400, 800, 1200, 1500 indicate the distance ran. In a tidy dataset, this distance would be a variable on its own, making each runner-distance pair a separate observation and hence a separate row.

To make untidy data tidy, a common operation is to “melt” it, which is to convert it from wide form to a long form:

runners = pd.melt(runners, id_vars="Runner",

value_vars=[400, 800, 1200, 1500],

var_name="distance",

value_name="time"

)

In this form it’s easier to filter, group, join and aggregate the data, and it’s also easier to model relationships between variables.

The opposite of melting is to pivot data, which can be useful to view data in different ways as we’ll see below.

For a detailed exposition of data tidying, have a look at this article.

Working with dataframes

We saw above how we can read in data into a dataframe using the read_csv() function.

Pandas also understands multiple other formats, for example using read_excel,

read_hdf, read_json, etc. (and corresponding methods to write to file:

to_csv, to_excel, to_hdf, to_json, etc.)

But sometimes you would want to create a dataframe from scratch. Also this can be done

in multiple ways, for example starting with a numpy array (see

DataFrame docs):

import numpy as np

dates = pd.date_range('20130101', periods=6)

df = pd.DataFrame(np.random.randn(6, 4), index=dates, columns=list('ABCD'))

df

or a dictionary (see same docs):

df = pd.DataFrame({'A': ['dog', 'cat', 'dog', 'cat', 'dog', 'cat', 'dog', 'dog'],

'B': ['one', 'one', 'two', 'three', 'two', 'two', 'one', 'three'],

'C': np.array([3] * 8, dtype='int32'),

'D': np.random.randn(8),

'E': np.random.randn(8)})

df

There are many ways to operate on dataframes. Let’s look at a few examples in order to get a feeling of what’s possible and what the use cases can be.

We can easily split and concatenate dataframes:

sub1, sub2, sub3 = df[:2], df[2:4], df[4:]

pd.concat([sub1, sub2, sub3])

When pulling data from multiple dataframes, a powerful pandas.DataFrame.merge method is

available that acts similarly to merging in SQL. Say we have a dataframe containing the age of some athletes:

age = pd.DataFrame([

{"Runner": "Runner 4", "Age": 18},

{"Runner": "Runner 2", "Age": 21},

{"Runner": "Runner 1", "Age": 23},

{"Runner": "Runner 3", "Age": 19},

])

We now want to use this table to annotate the original runners table from

before with their age. Note that the runners and age dataframes have a

different ordering to it, and age has an entry for Dave which is not

present in the runners table. We can let Pandas deal with all of it using

the merge method:

# Add the age for each runner

runners.merge(age, on="Runner")

In fact, much of what can be done in SQL is also possible with pandas.

groupby is a powerful method which splits a dataframe and aggregates data

in groups. To see what’s possible, let’s return to the Titanic dataset. Let’s

test the old saying “Women and children first”. We start by creating a new

column Child to indicate whether a passenger was a child or not, based on

the existing Age column. For this example, let’s assume that you are a

child when you are younger than 12 years:

titanic["Child"] = titanic["Age"] < 12

Now we can test the saying by grouping the data on Sex and then creating further sub-groups based on Child:

titanic.groupby(["Sex", "Child"])["Survived"].mean()

Here we chose to summarize the data by its mean, but many other common

statistical functions are available as dataframe methods, like

std, min,

max, cumsum,

median, skew,

var etc.

Exercises 2

Analyze the Titanic passenger list dataset

In the Titanic passenger list dataset, investigate the family size of the passengers (i.e. the “SibSp” column).

What different family sizes exist in the passenger list? Hint: try the

unique()methodWhat are the names of the people in the largest family group?

(Advanced) Create histograms showing the distribution of family sizes for passengers split by the fare, i.e. one group of high-fare passengers (where the fare is above average) and one for low-fare passengers (Hint: instead of an existing column name, you can give a lambda function as a parameter to

hist()to compute a value on the fly. For examplelambda x: "Poor" if df["Fare"].loc[x] < df["Fare"].mean() else "Rich").

Solution

Existing family sizes:

titanic["SibSp"].unique()

We get 8 from above. There is no

Namecolumn, since we madeNamethe index when we loaded the dataframe withread_csv, so we usepandas.DataFrame.indexto get the names. So, names of members of largest family(ies):titanic[titanic["SibSp"] == 8].index

Histogram of family size based on fare class:

titanic.hist("SibSp", lambda x: "Poor" if titanic["Fare"].loc[x] < titanic["Fare"].mean() else "Rich", rwidth=0.9)

Time series superpowers

An introduction of pandas wouldn’t be complete without mention of its special abilities to handle time series. To show just a few examples, we will use a new dataset of Nobel prize laureates available through an API of the Nobel prize organisation at https://api.nobelprize.org/v1/laureate.csv .

Unfortunately this API does not allow “non-browser requests”, so

pandas.read_csv will not work directly on it. Instead, we put a

local copy on Github which we can access (the original data is CC-0,

so we are allowed to do this). (Aside: if you do JupyterLab →

File → Open from URL → paste the URL above, it will open it in

JupyterLab and download a copy for your use.)

We can then load and explore the data:

nobel = pd.read_csv("https://github.com/AaltoSciComp/python-for-scicomp/raw/master/resources/data/laureate.csv")

nobel.head()

This dataset has three columns for time, “born”/”died” and “year”.

These are represented as strings and integers, respectively, and

need to be converted to datetime format. pandas.to_datetime()

makes this easy:

# the errors='coerce' argument is needed because the dataset is a bit messy

nobel["born"] = pd.to_datetime(nobel["born"], errors ='coerce')

nobel["died"] = pd.to_datetime(nobel["died"], errors ='coerce')

nobel["year"] = pd.to_datetime(nobel["year"], format="%Y")

Pandas knows a lot about dates (using .dt accessor):

print(nobel["born"].dt.day)

print(nobel["born"].dt.year)

print(nobel["born"].dt.weekday)

We can add a column containing the (approximate) lifespan in years rounded to one decimal:

nobel["lifespan"] = round((nobel["died"] - nobel["born"]).dt.days / 365, 1)

and then plot a histogram of lifespans:

nobel.hist(column='lifespan', bins=25, figsize=(8,10), rwidth=0.9)

Finally, let’s see one more example of an informative plot (boxplot())

produced by a single line of code:

nobel.boxplot(column="lifespan", by="category")

Exercises 3

Analyze the Nobel prize dataset

What country has received the largest number of Nobel prizes, and how many? How many countries are represented in the dataset? Hint: use the

describemethod on thebornCountryCodecolumn.Create a histogram of the age when the laureates received their Nobel prizes. Hint: follow the above steps we performed for the lifespan.

List all the Nobel laureates from your country.

Now more advanced steps:

Now define an array of 4 countries of your choice and extract only laureates from these countries (you need to look at the data and find how countries are written, and replace

COUNTRYwith those strings):countries = np.array([COUNTRY1, COUNTRY2, COUNTRY3, COUNTRY4]) subset = nobel.loc[nobel['bornCountry'].isin(countries)]Use

groupby()to compute how many nobel prizes each country received in each category. Thesize()method tells us how many rows, hence nobel prizes, are in each group:nobel.groupby(['bornCountry', 'category']).size()(Optional) Create a pivot table to view a spreadsheet like structure, and view it

First add a column “number” to the nobel dataframe containing 1’s (to enable the counting below). We need to make a copy of

subset, because right now it is only a view:subset = subset.copy() subset.loc[:, 'number'] = 1Then create the

pivot_table():table = subset.pivot_table( values="number", index="bornCountry", columns="category", aggfunc="sum" )(Optional) Install the

seabornvisualization library if you don’t already have it, and create a heatmap of your table:import seaborn as sns sns.heatmap(table,linewidths=.5);(Optional) Play around with other nice looking plots:

sns.violinplot(y=subset["year"].dt.year, x="bornCountry", inner="stick", data=subset);sns.swarmplot(y="year", x="bornCountry", data=subset, alpha=.5);subset_physchem = nobel.loc[ nobel['bornCountry'].isin(countries) & ( nobel['category'].isin(['physics']) | nobel['category'].isin(['chemistry']) ) ] sns.catplot( x="bornCountry", y="year", col="category", data=subset_physchem, kind="swarm" );sns.catplot(x="bornCountry", col="category", data=subset_physchem, kind="count");

Solution

Below is solutions for the basic steps, advanced steps are inline above.

We use the describe() method:

nobel.bornCountryCode.describe()

# count 969

# unique 82

# top US

# freq 292

We see that the US has received the largest number of Nobel prizes, and 82 countries are represented.

To calculate the age at which laureates receive their prize, we need to ensure that the “year” and “born” columns are in datetime format:

nobel["born"] = pd.to_datetime(nobel["born"], errors ='coerce')

nobel["year"] = pd.to_datetime(nobel["year"], format="%Y")

Then we add a column with the age at which Nobel prize was received and plot a histogram:

nobel["age_nobel"] = round((nobel["year"] - nobel["born"]).dt.days / 365, 1)

nobel.hist(column="age_nobel", bins=25, figsize=(8,10), rwidth=0.9)

We can print names of all laureates from a given country, e.g.:

nobel[nobel["country"] == "Sweden"].loc[:, "firstname":"surname"]

Beyond the basics

Faster expression evaluation with eval()

Larger DataFrame operations might be faster using eval() with string expressions (see

here).

To do so, we start by installing numexpr a Python library which optimizes such expressions:

%conda install numexpr

You may need to restart the kernel in Jupyter for this to be. Then:

import pandas as pd

import numpy as np

# Make some really big dataframes

nrows, ncols = 100000, 100

rng = np.random.RandomState(42)

df1, df2, df3, df4 = (pd.DataFrame(rng.rand(nrows, ncols))

for i in range(4))

Adding dataframes the pythonic way yields:

%timeit df1 + df2 + df3 + df4

# 80ms

And by using eval():

%timeit pd.eval('df1 + df2 + df3 + df4', engine='numexpr')

# 40ms

Assigning columns with apply()

We can assign function return lists as dataframe columns:

def fibo(n):

"""Compute Fibonacci numbers. Here we skip the overhead from the

recursive function calls by using a list. """

if n < 0:

raise NotImplementedError('Not defined for negative values')

elif n < 2:

return n

memo = [0]*(n+1)

memo[0] = 0

memo[1] = 1

for i in range(2, n+1):

memo[i] = memo[i-1] + memo[i-2]

return memo

df = pd.DataFrame({'Generation': np.arange(100)})

df['Number of Rabbits'] = fibo(99) # Assigns list to column

There is much more to Pandas than what we covered in this lesson. Whatever your

needs are, chances are good there is a function somewhere in its API. You should try to get good at

searching the web for an example showing what you can do. And when

there is not, you can always

apply your own functions to the data using apply:

from functools import lru_cache

@lru_cache

def fib(x):

"""Compute Fibonacci numbers. The @lru_cache remembers values we

computed before, which speeds up this function a lot."""

if x < 0:

raise NotImplementedError('Not defined for negative values')

elif x < 2:

return x

else:

return fib(x - 2) + fib(x - 1)

df = pd.DataFrame({'Generation': np.arange(100)})

df['Number of Rabbits'] = df['Generation'].apply(fib)

Note that the numpy precision for integers caps at int64 while python ints are unbounded –

limited by memory size. Thus, the result from fibonacci(99) would be erroneous when

using numpy ints. The type of df['Number of Rabbits'][99] given by both functions above

is in fact <class 'int'>.

See also

Modern Pandas (2020) – a blog series on writing modern idiomatic pandas.

Python Data Science Handbook (2016) – which contains a chapter on Data Manipulation with Pandas.

Alternatives to Pandas

Polars

Polars is a DataFrame library designed to processing data with a fast lighting time. Polars is implemented in Rust Programming language and uses Apache Arrow as its memory format.

Dask

Dask is a Python package for parallel computing in Python and uses parallel data-frames for dealing with very large arrays.

Vaex

Vaex is a high performance Python library for lazy Out-of-Core DataFrames, to visualize and explore big tabular datasets.

Keypoints

pandas dataframes are a good data structure for tabular data

Dataframes allow both simple and advanced analysis in very compact form

Xarray

Questions

How shall we deal with real-world datasets that are usually more than just raw numbers?

What is the advantage of using labelled multidimensional arrays?

What does Xarray add to Numpy and Pandas to address these questions?

Objectives

Learn how to apply operations over dimensions and select values by label

Understand Xarray’s DataArrays and Datasets

Learn how to easily plot data in Xarray

Learn how to turn your own data into an Xarray Dataset

We have already seen how Pandas simplifies working with tabular NumPy data by adding labels to columns and rows. In this lesson, we take a look at how xarray can be used to add the same functionality to multidimensional data. Let’s consider the following example:

Imagine we have a dataset representing temperature measurements across different heights, latitudes, and longitudes. We can store the temperature data as a 3D NumPy array where each axis corresponds to one of these dimensions:

import numpy as np

# Create a 3D numpy array: height x latitude x longitude

data = np.random.rand(10, 5, 5) # 10 heights, 5 latitudes, 5 longitudes

Let’s assume now we want to take a look at a specific value in the dataset at a certain height, latitude, and longitude. We could do this by indexing the array with the corresponding indices:

# Get the temperature at height 3, latitude 2, longitude 4

temperature = data[3, 2, 4]

OK, we got a value, but how do we know whether this value corresponds to the correct height, latitude and longitude? Are we sure that latitude was the second dimension in the dataset? Was it the second or third index that corresponds to the correct position? In pure NumPy, we are mostly left in the dark and need to manually keep track of these things.

Unfortunately, Pandas isn’t of much help either since it is not designed for data with more than 2 dimensions. Fortunately, some clever climate scientists have come up with a solution to this problem and created Xarray.

What is Xarray?

Xarray is a powerful Python library that introduces labelled multidimensional arrays. This means the axes have labels (=dimensions), each row/column has a label (coordinates), and labels can even have units of measurement. This makes it much easier to follow what the data in an array means and select specific portions of data.

We will first download a dataset similar to the example above to illustrate the advantages of Xarray. We will cover how to transform your own data into an Xarray Dataset later in this lecture.

Let us open a python shell and download a public dataset:

>>> from pythia_datasets import DATASETS

>>> filepath = DATASETS.fetch('NARR_19930313_0000.nc')

We can now import xarray and open the dataset. Le’ts take a look at what it contains:

>>> import xarray as xr

>>> ds = xr.open_dataset(filepath)

>>> ds

Output:

<xarray.Dataset> Size: 15MB

Dimensions: (time1: 1, isobaric1: 29, y: 119, x: 268)

Coordinates:

* time1 (time1) datetime64[ns] 8B 1993-03-13

* isobaric1 (isobaric1) float32 116B 100.0 125.0 ... 1e+03

* y (y) float32 476B -3.117e+03 ... 714.1

* x (x) float32 1kB -3.324e+03 ... 5.343e+03

Data variables:

u-component_of_wind_isobaric (time1, isobaric1, y, x) float32 4MB ...

LambertConformal_Projection int32 4B ...

lat (y, x) float64 255kB ...

lon (y, x) float64 255kB ...

Geopotential_height_isobaric (time1, isobaric1, y, x) float32 4MB ...

v-component_of_wind_isobaric (time1, isobaric1, y, x) float32 4MB ...

Temperature_isobaric (time1, isobaric1, y, x) float32 4MB ...

Attributes:

Originating_or_generating_Center: US National Weather Service, Nation...

Originating_or_generating_Subcenter: North American Regional Reanalysis ...

GRIB_table_version: 0,131

Generating_process_or_model: North American Regional Reanalysis ...

Conventions: CF-1.6

history: Read using CDM IOSP GribCollection v3

featureType: GRID

History: Translated to CF-1.0 Conventions by...

geospatial_lat_min: 10.753308882144761

geospatial_lat_max: 46.8308828962289

geospatial_lon_min: -153.88242040519995

geospatial_lon_max: -42.666108129242815

That was a lot of information at once, but let’s break it down.

Close to the top of the output we see the

Dimensionsof the dataset:time1,isobaric1,y, andx.Below the dimensions, we see the

Coordinatesof the dataset. These are for each dimension the labels for each value along that dimension. For example, we have a timestamp of each value along the first dimension (time1).The

Data variablesare the actual data stored in the dataset. We see that the dataset contains a bunch of arrays, most of which are 4-dimensional, where each dimension corresponds to one of theDimensionsdescribed above. There are also some 2-dimensional arrays that only have some of theDimensionsdescribed above.At the bottom, we see the

Attributesof the dataset. This is a dictionary that stores metadata about the dataset.

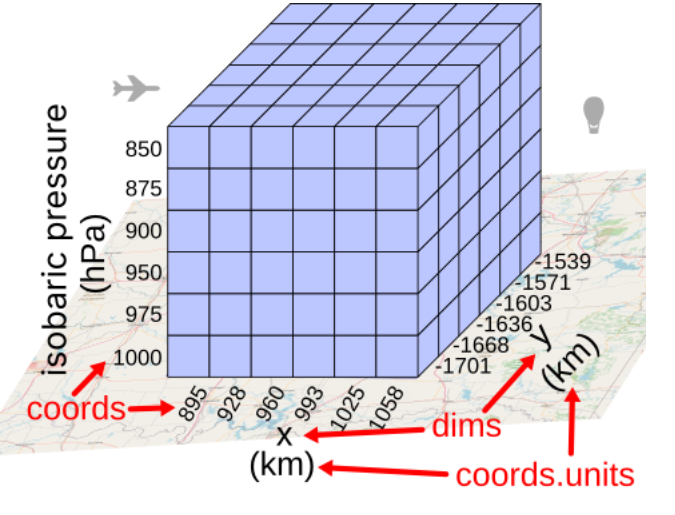

The following image shows the structure of this particular Xarray Dataset:

Accessing and manipulating data in Xarray

An xarray Dataset typically consists of multiple DataArrays. Our example dataset has 7 of them (u-component_of_wind_isobaric, LambertConformal_Projection, lat, lon, Geopotential_height_isobaric, v-component_of_wind_isobaric, Temperature_isobaric).

We can select a single DataArray from the dataset using a dictionary-like syntax:

>>> temperature_data = ds['Temperature_isobaric']

>>> temperature_data

Output:

<xarray.DataArray 'Temperature_isobaric' (time1: 1, isobaric1: 29, y: 119,

x: 268)> Size: 4MB

[924868 values with dtype=float32]

Coordinates:

* time1 (time1) datetime64[ns] 8B 1993-03-13

* isobaric1 (isobaric1) float32 116B 100.0 125.0 150.0 ... 950.0 975.0 1e+03

* y (y) float32 476B -3.117e+03 -3.084e+03 -3.052e+03 ... 681.6 714.1

* x (x) float32 1kB -3.324e+03 -3.292e+03 ... 5.311e+03 5.343e+03

Attributes:

long_name: Temperature @ Isobaric surface

units: K

description: Temperature

grid_mapping: LambertConformal_Projection

Grib_Variable_Id: VAR_7-15-131-11_L100

Grib1_Center: 7

Grib1_Subcenter: 15

Grib1_TableVersion: 131

Grib1_Parameter: 11

Grib1_Level_Type: 100

Grib1_Level_Desc: Isobaric surface

Xarray uses Numpy(-like) arrays under the hood, we can always access the underlying Numpy array using the .values attribute:

>>> temperature_numpy = ds['Temperature_isobaric'].values

>>> temperature_numpy

Output:

array([[[[201.88957, 202.2177 , 202.49895, ..., 195.10832, 195.23332,

195.37395],

[201.68645, 202.0302 , 202.3427 , ..., 195.24895, 195.38957,

195.51457],

[201.5302 , 201.87395, 202.20207, ..., 195.37395, 195.51457,

195.63957],

...,

[276.735 , 276.70374, 276.6881 , ..., 289.235 , 289.1725 ,

289.07874],

[276.86 , 276.84436, 276.78186, ..., 289.1881 , 289.11 ,

289.01624],

[277.01624, 276.82874, 276.82874, ..., 289.14124, 289.0475 ,

288.96936]]]], dtype=float32)

Xarray allows you to select data using the .sel() method, which uses the labels of the dimensions to extract data:

>>> ds['Temperature_isobaric'].sel(x='-3292.0078')

By default, you need to enter the exact coordinate, but often we want to select the closest value to some number. For this, you can use method='nearest':

>>> ds['Temperature_isobaric'].sel(x='-3292', method='nearest')

Output:

<xarray.DataArray 'Temperature_isobaric' (time1: 1, isobaric1: 29, y: 119)> Size: 14kB

array([[[202.2177 , 202.0302 , ..., 219.67082, 219.74895],

[202.58566, 202.58566, ..., 219.16379, 219.28879],

...,

[292.1622 , 292.14658, ..., 275.05283, 275.11533],

[294.1256 , 294.14124, ..., 276.84436, 276.82874]]], dtype=float32)

Coordinates:

* time1 (time1) datetime64[ns] 8B 1993-03-13

* isobaric1 (isobaric1) float32 116B 100.0 125.0 150.0 ... 950.0 975.0 1e+03

* y (y) float32 476B -3.117e+03 -3.084e+03 -3.052e+03 ... 681.6 714.1

x float32 4B -3.292e+03

Attributes:

long_name: Temperature @ Isobaric surface

units: K

description: Temperature

grid_mapping: LambertConformal_Projection

Grib_Variable_Id: VAR_7-15-131-11_L100

Grib1_Center: 7

Grib1_Subcenter: 15

Grib1_TableVersion: 131

Grib1_Parameter: 11

Grib1_Level_Type: 100

Grib1_Level_Desc: Isobaric surface

We can also access the same data by index using the .isel() method:

>>> ds['Temperature_isobaric'].isel(x=1)

Output:

<xarray.DataArray 'Temperature_isobaric' (time1: 1, isobaric1: 29, y: 119)> Size: 14kB

array([[[202.2177 , 202.0302 , ..., 219.67082, 219.74895],

[202.58566, 202.58566, ..., 219.16379, 219.28879],

...,

[292.1622 , 292.14658, ..., 275.05283, 275.11533],

[294.1256 , 294.14124, ..., 276.84436, 276.82874]]], dtype=float32)

Coordinates:

* time1 (time1) datetime64[ns] 8B 1993-03-13

* isobaric1 (isobaric1) float32 116B 100.0 125.0 150.0 ... 950.0 975.0 1e+03

* y (y) float32 476B -3.117e+03 -3.084e+03 -3.052e+03 ... 681.6 714.1

x float32 4B -3.292e+03

Attributes:

long_name: Temperature @ Isobaric surface

units: K

description: Temperature

grid_mapping: LambertConformal_Projection

Grib_Variable_Id: VAR_7-15-131-11_L100

Grib1_Center: 7

Grib1_Subcenter: 15

Grib1_TableVersion: 131

Grib1_Parameter: 11

Grib1_Level_Type: 100

Grib1_Level_Desc: Isobaric surface

A DataArray provides a lot of the functionality we expect from Numpy arrays, such as sum(), mean(), median(), min(), and max() that we can use these methods to aggregate data over one or multiple dimensions:

>>> # Calculate the mean over the 'isobaric1' dimension

>>> ds['Temperature_isobaric'].mean(dim='isobaric1')

Output:

<xarray.DataArray 'Temperature_isobaric' (time1: 1, y: 119, x: 268)> Size: 128kB

array([[[259.88446, 259.90222, 259.91678, ..., 262.61667, 262.6285 ,

262.65167],

[259.74866, 259.76752, 259.78638, ..., 262.5757 , 262.58218,

262.57516],

[259.6156 , 259.63498, 259.65115, ..., 262.52075, 262.51215,

262.4976 ],

...,

[249.8796 , 249.83649, 249.79501, ..., 254.43617, 254.49059,

254.54985],

[249.8505 , 249.80202, 249.75244, ..., 254.37044, 254.42378,

254.47711],

[249.82195, 249.75998, 249.71204, ..., 254.30956, 254.35805,

254.41139]]], dtype=float32)

Coordinates:

* time1 (time1) datetime64[ns] 8B 1993-03-13

* y (y) float32 476B -3.117e+03 -3.084e+03 -3.052e+03 ... 681.6 714.1

* x (x) float32 1kB -3.324e+03 -3.292e+03 ... 5.311e+03 5.343e+03

Let’s take a look at a concrete example and compare it to NumPy. We will calculate the max temperature over the ‘isobaric1’ dimension at a specific value for x:

>>> # Xarray

>>> ds['Temperature_isobaric'].sel(x='-3259.5447').max(dim='isobaric1')

Output:

array([[294.11 , 294.14124, 294.1256 , 294.0475 , 293.90686, 293.6256 ,

...,

276.46936, 276.59436, 276.6881 , 276.78186, 276.82874]],

dtype=float32)

In comparison, if we were to use plain Numpy, this would be:

>>> # NumPy

>>> np.max(temperature_numpy[:, :, :, 2 ], axis = 1)

Output:

array([[294.11 , 294.14124, 294.1256 , 294.0475 , 293.90686, 293.6256 ,

...,

276.46936, 276.59436, 276.6881 , 276.78186, 276.82874]],

dtype=float32)